Why Most AI Chatbots Fail in Customer Support and How to Fix It

AI chatbots are now common in customer support, but most still fail to deliver real value. Users often face dead-end conversations, while support teams deal with escalations the bots couldn’t handle. This guide explains why most chatbots fall short and how to fix them.

Problems

- They’re pointed at the wrong problems. Teams launch bots broadly instead of starting with a small set of high-impact, well-scoped topics and scenarios. Established conversation design guidance recommends identifying the specific tasks users actually ask for, categorizing scenarios (informational, task completion, troubleshooting), and designing a minimal conversation tree you can validate and iterate on. (1)

- Weak intent design and fallbacks. Brittle or overly broad intents miss user goals, and poor disambiguation and missing fallbacks lead to loops and dead ends. Leading conversation design playbooks emphasize robust intent coverage, disambiguation prompts, and guardrails, while cloud contact-center guides stress graceful fallback patterns. (2, 3)

- No context or system integration. Bots often lack access to CRMs, order systems, or prior case history, so answers feel generic and agents can’t continue seamlessly. Integration best practices recommend connecting bots to back-end systems to personalize the experience and passing context to live agents so customers don’t have to repeat themselves. (3)

- Poor conversation UX and expectation setting. Unclear bot capabilities, inconsistent tone, and missing “what I can do” onboarding cause abandonment. UX guidance for conversational agents calls for clearly conveying capabilities, defining the bot persona, and designing for interruptions and multi-turn flows. (4)

- Lack of omnichannel continuity. Customers switch between chat, voice, email, and social, and many bots can’t maintain context or hand off smoothly across channels. Contact-center reference architectures highlight digital-first experiences with virtual agents across SMS, social, chat, email, and voice. (5)

- No reliable human handoff. Bots that can’t escalate while preserving history frustrate users and increase handle time. Virtual agent frameworks position automation as first-line support with configurable handoff to human agents; the experience assumes smooth escalation, not bot-only resolution. (6)

- Fragile data and knowledge operations. Hallucinations, outdated answers, and broken flows stem from weak knowledge management and versioning and from limited observability. Engineering write-ups from teams running conversational systems at scale show why detailed logs and fault-tolerant pipelines are necessary to debug and evolve chatbots safely. (7)

- Missing measurement and governance. Teams ship bots without success metrics (containment, CSAT, time-to-resolution, escalation quality) or feedback loops to improve topics and intents. Practitioner playbooks repeatedly tie success to iterative design, instrumentation, and measurement. (8)
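The loops and dead ends described above are easy to miss in aggregate dashboards but straightforward to flag in transcripts. The sketch below is illustrative only: it assumes a hypothetical transcript format in which each bot turn records the intent it matched, with a hypothetical `"fallback"` label for unmatched turns, and flags conversations where the bot fell back several times in a row.

```python
from dataclasses import dataclass

# Hypothetical label a bot platform might attach to turns where no intent matched.
FALLBACK = "fallback"


@dataclass
class Turn:
    speaker: str              # "user" or "bot"
    matched_intent: str = ""  # intent the bot resolved (bot turns only)


def longest_fallback_streak(turns: list[Turn]) -> int:
    """Longest run of bot fallback turns; user turns in between don't break the run."""
    longest = current = 0
    for turn in turns:
        if turn.speaker != "bot":
            continue  # a user rephrasing between fallbacks is still part of the loop
        if turn.matched_intent == FALLBACK:
            current += 1
            longest = max(longest, current)
        else:
            current = 0  # the bot recovered, so the streak resets
    return longest


def flag_dead_ends(conversations: dict[str, list[Turn]], max_streak: int = 2) -> list[str]:
    """Sorted IDs of conversations whose fallback streak exceeds max_streak."""
    return sorted(
        conv_id
        for conv_id, turns in conversations.items()
        if longest_fallback_streak(turns) > max_streak
    )


conversations = {
    "ok": [Turn("user"), Turn("bot", "order_status")],
    "loop": [Turn("bot", FALLBACK), Turn("user"), Turn("bot", FALLBACK),
             Turn("user"), Turn("bot", FALLBACK)],
}
print(flag_dead_ends(conversations))  # ['loop']
```

Grouping flagged conversations by the last intent matched before the loop is one quick way to find intents that need better disambiguation or a fallback path.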

How to Fix It

- Start with the right scope.

- Pick 5–10 high-volume, high-value use cases where you can deliver complete outcomes (password reset, order status, refund eligibility).

- Map scenarios and decision points; ship a minimal conversation tree; validate with real users, then iterate. (1)

- Engineer intents and fallbacks like you mean it.

- Use narrow, well-named intents with example utterances; add clarification prompts when signals are low.

- Implement graceful fallback tiers (clarify → rephrase → escalate). A well-regarded “Do’s and Don’ts” checklist keeps teams honest about guardrails. (2)

- Wire the bot into your systems of record.

- Connect to CRM, order, billing, identity, and case systems; personalize with known context (channel, user state).

- On handoff, pass the transcript and collected entities so the human doesn’t restart the conversation. Integration playbooks call this out as essential to reduce repetition. (3)

- Design the conversation experience.

- Onboarding: explicitly tell users what the bot can and can’t do; offer quick action chips.

- Persona and tone: consistent, concise, and helpful; document it.

- Handle interruptions and digressions; support multi-turn flows. Well-documented UX and bot design guides cover these patterns. (4)

- Build for omnichannel from day one.

- Keep state across channels; ensure policies for when to stay self-serve vs. when to switch to voice or live chat.

- Use platforms that support SMS, social, chat, email, and voice with AI virtual agents, following the reference architectures in 5.

- Treat escalation as a feature.

- Define clear, measurable escalation rules (confidence thresholds, sentiment, task eligibility).

- Make human agents “continuation points,” not restarts; this is how leading virtual agent providers expect escalation to work. (6)

- Operationalize data, knowledge, and reliability.

- Establish a knowledge lifecycle: source of truth, review cadence, versioning, and automated freshness checks.

- Add structured logs and replay tools for conversational journeys; large-scale reference implementations show why this matters in production. (7)

- Instrument what matters.

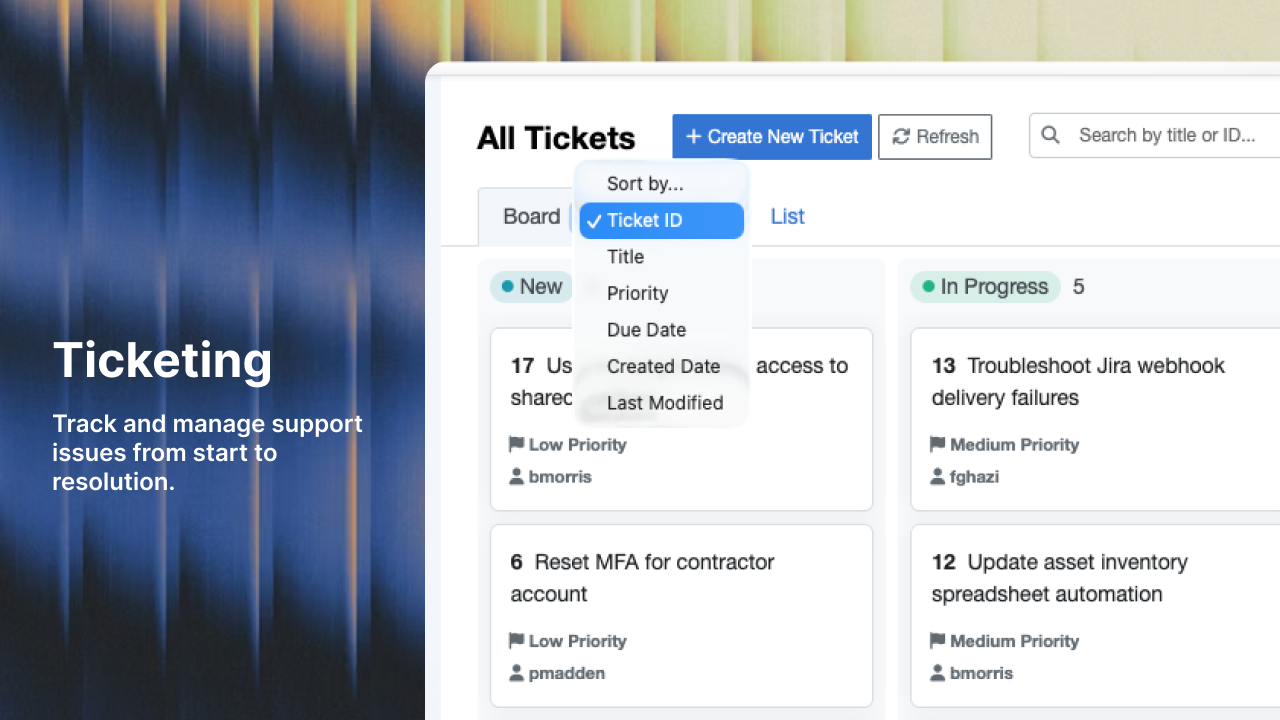

- Core KPIs: containment (useful self-service), successful task completion, average time-to-resolution, assisted handoff quality, CSAT, and cost-to-serve.

- Run topic-level A/Bs (prompting, pathways, UI variants) and iterate continuously; an evidence-based approach emphasized across practitioner best-practice docs. (8)

- Platform choices and architecture (when you grow).

- The platforms in 6 and 3 support virtual agents, analytics, and native handoff patterns for contact centers.

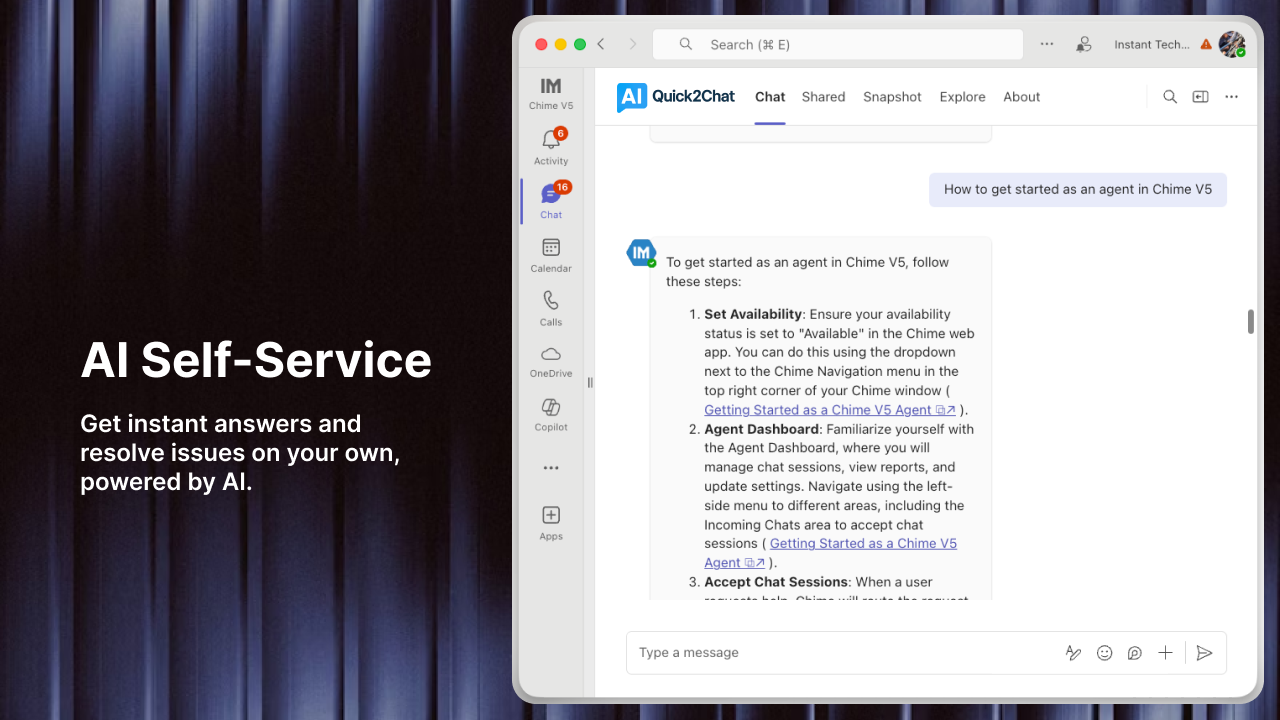

- The tooling in 9 offers integrated customer service and agent collaboration patterns.

- The assistants described in 2 and 10 provide strong enterprise intent design guidance and multichannel approaches.
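The fallback tiers and escalation rules above (clarify → rephrase → escalate, gated by confidence, sentiment, and task eligibility) can be expressed as a small, testable policy function. This is a minimal sketch, not any platform’s API: the signal names, the sentiment scale, the thresholds, and the eligible-intent set are all hypothetical placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ANSWER = "answer"
    CLARIFY = "clarify"    # ask a disambiguation question
    REPHRASE = "rephrase"  # ask the user to restate the request
    ESCALATE = "escalate"  # hand off to a human agent


@dataclass
class TurnSignals:
    intent: str
    confidence: float     # classifier confidence in [0, 1]
    sentiment: float      # hypothetical score in [-1, 1]; negative means frustrated
    failed_attempts: int  # low-confidence turns so far in this conversation


# Illustrative values only; tune them against your own conversation logs.
CONFIDENCE_THRESHOLD = 0.7
SENTIMENT_FLOOR = -0.5
BOT_ELIGIBLE_INTENTS = {"password_reset", "order_status", "refund_eligibility"}


def next_action(s: TurnSignals) -> Action:
    # Hard rule first: a clearly frustrated user goes to a human.
    if s.sentiment <= SENTIMENT_FLOOR:
        return Action.ESCALATE
    if s.confidence >= CONFIDENCE_THRESHOLD:
        # Confident match, but only answer intents the bot can complete end to end.
        return Action.ANSWER if s.intent in BOT_ELIGIBLE_INTENTS else Action.ESCALATE
    # Low confidence: walk the fallback tiers clarify -> rephrase -> escalate.
    if s.failed_attempts == 0:
        return Action.CLARIFY
    if s.failed_attempts == 1:
        return Action.REPHRASE
    return Action.ESCALATE


print(next_action(TurnSignals("order_status", 0.4, 0.0, 1)))  # Action.REPHRASE
```

Checking sentiment before confidence means a frustrated user reaches a human even when the classifier is sure of the intent; whether that ordering suits your support model is a policy choice, and keeping it in one function makes it easy to review and test.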

Implementation Checklist (90 Days)

Weeks 1–2: Strategy & scope

- Pull top 20 intents from support logs; pick 5–10 to automate end-to-end.

- Draft conversation trees and fallback rules. (1)
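Once tickets carry an intent label, pulling the top intents is a frequency count, and it helps to see how much volume a shortlist would cover. The sketch assumes a hypothetical log export where each ticket is a dict with an `intent` key; it ranks by volume only, so the “high-value” half of the selection still needs judgment (handle time, revenue at risk, compliance exposure).

```python
from collections import Counter


def top_intents(tickets: list[dict], n: int = 20) -> list[tuple[str, int]]:
    """Rank intents by ticket volume; tickets use a hypothetical {"intent": ...} schema."""
    counts = Counter(t["intent"] for t in tickets if t.get("intent"))
    return counts.most_common(n)


def coverage(ranked: list[tuple[str, int]], total: int, k: int) -> float:
    """Share of all tickets covered by automating the first k ranked intents."""
    return sum(count for _, count in ranked[:k]) / total if total else 0.0


tickets = (
    [{"intent": "order_status"}] * 50
    + [{"intent": "password_reset"}] * 30
    + [{"intent": "refund_eligibility"}] * 15
    + [{"intent": "other"}] * 5
)
ranked = top_intents(tickets)
print(ranked[:3])  # [('order_status', 50), ('password_reset', 30), ('refund_eligibility', 15)]
print(coverage(ranked, len(tickets), k=2))  # 0.8
```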

Weeks 3–6: Build the MVP

- Connect CRM/order/identity; implement context passing on human handoff. (3)

- Ship clear onboarding (“Here’s what I can do”), define persona and tone, and support interruptions. (4)

- Enable at least two channels (e.g., web + voice) with unified state. (5)
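Context passing on handoff and unified state across channels both come down to one conversation record that any channel or agent can pick up. A minimal sketch follows; the `HandoffContext` structure and its field names are hypothetical, and a real deployment would map them onto whatever handoff payload its contact-center platform accepts.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Message:
    role: str     # "user", "bot", or "agent"
    text: str
    channel: str  # e.g. "web", "voice", "sms"


@dataclass
class HandoffContext:
    """Hypothetical payload passed to a human agent on escalation."""
    conversation_id: str
    customer_id: str
    intent: str
    entities: dict[str, str] = field(default_factory=dict)  # slots already collected
    transcript: list[Message] = field(default_factory=list)
    escalation_reason: str = ""

    def add(self, role: str, text: str, channel: str) -> None:
        # One transcript across channels keeps state unified when the user switches.
        self.transcript.append(Message(role, text, channel))

    def to_json(self) -> str:
        # asdict() recurses into the nested Message dataclasses.
        return json.dumps(asdict(self))


ctx = HandoffContext("conv-123", "cust-42", "refund_eligibility")
ctx.entities["order_id"] = "A1001"
ctx.add("user", "I want a refund for order A1001", "web")
ctx.add("bot", "Let me check that order.", "web")
ctx.add("user", "Calling instead, this is urgent", "voice")
ctx.escalation_reason = "user switched to voice"
payload = json.loads(ctx.to_json())
```

The agent desktop receives the collected `order_id` and the full web-plus-voice history, so the customer does not have to repeat the order number.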

Weeks 7–10: Reliability & knowledge ops

- Add structured logging, redaction, and replay for failed paths; stand up a knowledge review cadence. (7)
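Structured logs make failed paths replayable, and redaction keeps customer data out of them. The sketch below emits one JSON line per conversational event and masks e-mail addresses and card-like numbers before logging. The event names, field layout, and regex patterns are illustrative assumptions; production redaction should follow a reviewed PII policy rather than two regexes.

```python
import json
import re
import time

# Illustrative patterns only: e-mail addresses and 13-16 digit card-like numbers.
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD]"),
]


def redact(text: str) -> str:
    for pattern, placeholder in REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text


def log_event(conversation_id: str, turn: int, event: str, text: str, **fields) -> str:
    """Build one JSON log line for a conversational event (hypothetical schema)."""
    record = {
        "ts": time.time(),
        "conversation_id": conversation_id,
        "turn": turn,
        "event": event,  # e.g. "intent_matched", "fallback", "handoff"
        "text": redact(text),
        **fields,
    }
    # In production this line would go to your log pipeline; here it is returned.
    return json.dumps(record, sort_keys=True)


def replay(lines: list[str], conversation_id: str) -> list[dict]:
    """Reconstruct one conversation, in turn order, from JSON log lines."""
    events = [json.loads(line) for line in lines]
    return sorted(
        (e for e in events if e["conversation_id"] == conversation_id),
        key=lambda e: e["turn"],
    )


lines = [
    log_event("c1", 2, "fallback", "still broken"),
    log_event("c1", 1, "intent_matched",
              "email jane.doe@example.com card 4111 1111 1111 1111 thanks",
              intent="refund_eligibility"),
    log_event("c2", 1, "intent_matched", "hi"),
]
events = replay(lines, "c1")
```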

Weeks 11–13: Measure & iterate

- Track containment, task completion, handoff quality, CSAT; A/B test prompts and flows; expand topics based on evidence. (8)
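To keep these metrics comparable across releases, compute them the same way every time from per-conversation records, and check A/B differences for significance before expanding a variant. A minimal sketch, assuming a hypothetical `Conversation` record: “containment” here means completed without escalation, which is one reasonable reading of “useful self-service”, and the A/B check is a standard two-sided two-proportion z-test rather than anything platform-specific.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Conversation:
    task_completed: bool          # the user's goal was actually achieved
    escalated: bool               # handed off to a human agent
    minutes_to_resolution: float
    csat: Optional[int] = None    # 1-5 survey score, if the user answered


def kpis(convs: list[Conversation]) -> dict[str, float]:
    n = len(convs)
    if n == 0:
        return {}
    rated = [c.csat for c in convs if c.csat is not None]
    return {
        # Contained = completed by the bot with no human handoff.
        "containment": sum(c.task_completed and not c.escalated for c in convs) / n,
        "task_completion": sum(c.task_completed for c in convs) / n,
        "escalation_rate": sum(c.escalated for c in convs) / n,
        "avg_minutes_to_resolution": sum(c.minutes_to_resolution for c in convs) / n,
        "csat": sum(rated) / len(rated) if rated else float("nan"),
    }


def two_proportion_test(succ_a: int, n_a: int, succ_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test for variant B vs. A; returns (z, p_value)."""
    p_a, p_b = succ_a / n_a, succ_b / n_b
    pooled = (succ_a + succ_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


convs = [
    Conversation(True, False, 2.0, 5),
    Conversation(True, True, 10.0, 4),
    Conversation(False, True, 15.0),
    Conversation(True, False, 3.0, 3),
]
metrics = kpis(convs)
# Hypothetical A/B: 400/1000 tasks completed with prompt A vs. 450/1000 with prompt B.
z, p = two_proportion_test(400, 1000, 450, 1000)
```

With these made-up counts, z is about 2.26 and p is about 0.024, so variant B’s lift would clear a conventional 0.05 bar; smaller topics need proportionally more conversations before a difference like this is trustworthy.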

Sources

- Microsoft: conversation design & bot UX, topic scoping, and best practices. (Microsoft Learn)

- Google (Alphabet): Contact Center AI virtual agents & API best practices (Apigee). (Google Cloud)

- Amazon (AWS): customer service automation best practices; Amazon Connect + Lex for generative CX. (Amazon Web Services, Inc.)

- Salesforce: fault-tolerant data pipelines and observability for chatbots. (Salesforce Engineering Blog)

- IBM: why virtual assistants fail and how to avoid it. (IBM Developer)

- Oracle: intent modeling dos & don’ts; Digital Assistant guide. (Oracle Documentation)

- Cisco: omnichannel, AI virtual agents across channels (Webex Contact Center). (Cisco)